VisionLib Release 20.10.1 is now available at visionlib.com.

This is a major update for VisionLib: it adds ARFoundation support, a true highlight for Unity development. We’ve also updated the VLTrackingConfiguration component, making it even easier and more consistent for developers to configure tracking setups.

The new Plane Constrained Mode improves tracking performance when the tracked object is located on a flat surface. For enterprise use, we’ve added support for industrial uEye cameras. Read on.

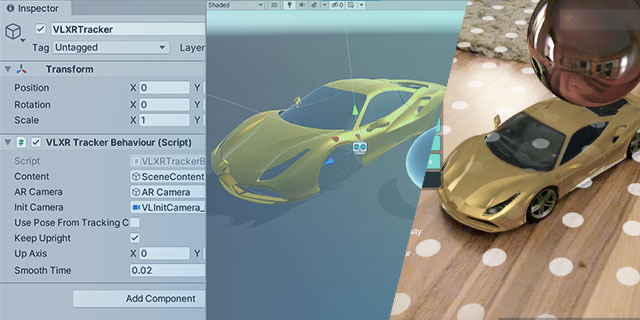

The new release brings ARFoundation support, which gives you full access to the ARKit / ARCore session while using VisionLib. Developers can now combine our Model Tracking with ARFoundation features such as plane detection, light estimation, or environment probes to blend content even more seamlessly with reality.

We’ve added the ARFoundationModelTracking example scene to the Experimental Package, which you can download from the customer area. Since the ARFoundation integration in VisionLib is still in beta, we welcome any feedback that helps us improve it.

VLTrackingConfiguration

Since its introduction in Release 20.3.1, the VLTrackingConfiguration component has made configuring VisionLib projects easier by providing a central place to reference tracking-related information.

In addition to the existing developer options for auto-starting tracking and choosing the input source at runtime, you can now set the tracking configuration, the license, and the calibration data all in one place, either by dragging and dropping the assets into the corresponding fields or by using URI strings (which can include schemes and queries).
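For readers less familiar with URI strings, the sketch below shows how such a string breaks down into a scheme, a path, and query parameters. It uses Python’s standard `urllib.parse` purely for illustration and does not reproduce how VisionLib resolves URIs; the scheme `example-dir` and the query key `debugLevel` are hypothetical placeholders, not documented VisionLib values.

```python
from urllib.parse import urlsplit, parse_qs


def describe_uri(uri):
    """Split a URI into its scheme, path and decoded query parameters."""
    parts = urlsplit(uri)
    return {
        "scheme": parts.scheme,            # text before the first ':'
        "path": parts.path,                # location of the referenced asset
        "query": parse_qs(parts.query),    # key -> list of values
    }


# Hypothetical URI: the scheme name and query key are placeholders,
# not values taken from the VisionLib documentation.
example = "example-dir:Configs/tracking.vl?debugLevel=2"
print(describe_uri(example))
```

The scheme tells the resolver where to look for the file, while the query carries extra options without changing the path itself; the exact schemes and parameters VisionLib accepts are listed in its documentation.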

Plane Constrained Mode

With this new feature, you can improve tracking performance when the tracked object rests on a flat surface and tracking is extended by SLAM. This is especially useful for objects standing on the floor or on a table: tracking then excludes tilted poses that such objects cannot assume. While the mode is enabled, tracking only searches for poses that align the model’s up vector with the world’s up vector.
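The up-vector constraint can be made concrete with a small geometric sketch. This is not VisionLib’s implementation; it only illustrates, in Python with hypothetical helper names, what aligning the model’s up vector with the world’s up vector means for a rotation. It assumes a Y-up convention for both model and world (as in Unity) and snaps a tilted rotation to the nearest upright one, leaving only rotation about the vertical axis (yaw) free.

```python
import math

# Assumption: Y-up convention for model and world. All helper names are
# hypothetical illustrations, not part of the VisionLib API.


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def norm(a):
    return math.sqrt(dot(a, a))


def normalize(a):
    n = norm(a)
    return [x / n for x in a]


def mat_vec(m, v):
    return [dot(row, v) for row in m]


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def axis_angle(axis, angle):
    """Rodrigues' formula: rotation by `angle` radians about unit `axis`."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    t = 1.0 - c
    return [[t * x * x + c, t * x * y - s * z, t * x * z + s * y],
            [t * x * y + s * z, t * y * y + c, t * y * z - s * x],
            [t * x * z - s * y, t * y * z + s * x, t * z * z + c]]


def rot_x(a):
    return axis_angle([1.0, 0.0, 0.0], a)


def rot_y(a):
    return axis_angle([0.0, 1.0, 0.0], a)


def constrain_upright(rotation, model_up=(0.0, 1.0, 0.0),
                      world_up=(0.0, 1.0, 0.0), eps=1e-9):
    """Apply the smallest extra rotation that maps model up onto world up."""
    v = normalize(mat_vec(rotation, list(model_up)))  # model up in world frame
    w = normalize(list(world_up))
    axis = cross(v, w)
    s, c = norm(axis), dot(v, w)
    if s < eps:
        if c > 0:
            return [row[:] for row in rotation]  # already upright
        # Upside down: turn 180 degrees about any axis perpendicular to v.
        helper = [1.0, 0.0, 0.0] if abs(v[0]) < 0.9 else [0.0, 0.0, 1.0]
        q = axis_angle(normalize(cross(v, helper)), math.pi)
    else:
        q = axis_angle([a / s for a in axis], math.atan2(s, c))
    return mat_mul(q, rotation)


# A pose tilted by 0.3 rad about X on top of a 0.7 rad yaw.
tilted = mat_mul(rot_x(0.3), rot_y(0.7))
upright = constrain_upright(tilted)
print(mat_vec(upright, [0.0, 1.0, 0.0]))
```

Because the correction is the smallest rotation that brings the model’s up vector onto the world’s up vector, a slightly tilted pose keeps its heading. A tracker that enforces the constraint during pose search would more likely parametrize candidates by yaw and translation only; the post-hoc snap shown here is just meant to make the geometry concrete.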

For demonstration purposes, we’ve added a checkbox to the AdvancedModelTracking scene and voice commands to the HoloLens example scenes that enable and disable Plane Constrained Mode.

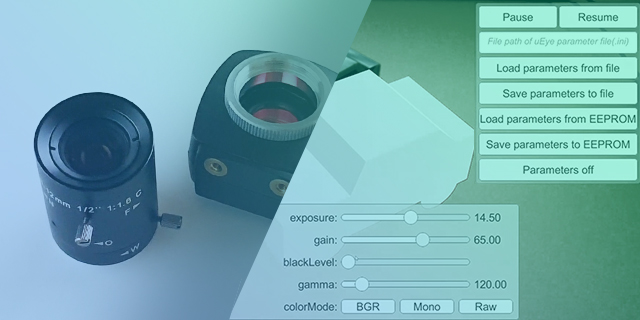

VisionLib has a steadily growing base of industrial users. The new SDK supports IDS uEye devices, a line of high-end industrial cameras, to meet the quality and accuracy demands of fields such as automated manufacturing, inspection, and robotics. Using VisionLib together with uEye may be particularly useful to you if your use case is characterized by some of the following requirements:

The new release comes with many more updates and bugfixes. Here is an overview of the most relevant ones:

We’re proud to introduce our all-new blog pages. Here, we’ll keep you informed about minor and major updates and about upcoming and past events, such as our joint talk with SAP on Object Tracking, Smart Factories & the XR Cloud at the VR/AR Global Summit in September.

Stay updated on social media: follow us on LinkedIn and Twitter, and have a look at our YouTube channel. We share insights into current developments, present upcoming features, and showcase exciting work that partners and customers have created using VisionLib.

Stay healthy and stay tuned.

Have you seen our latest #tech videos? See how VisionLib can track and check pieces while you work.

We help you apply Augmented and Mixed Reality in your project, on your platform, or within enterprise solutions. Our online documentation and video tutorials grow continuously and deliver comprehensive insights into VisionLib development.

Check out these new articles: whether you’re a pro or a beginner, our new documentation articles on understanding tracking and on debugging options will give you a clearer view of VisionLib and Model Tracking.

For troubleshooting, look up the FAQ or write us a message at request@visionlib.com.

VisionLib is a product by Visometry. Learn more about its makers and about sales, partner, and job opportunities at visometry.com.

© 2024 Visometry GmbH all rights reserved.